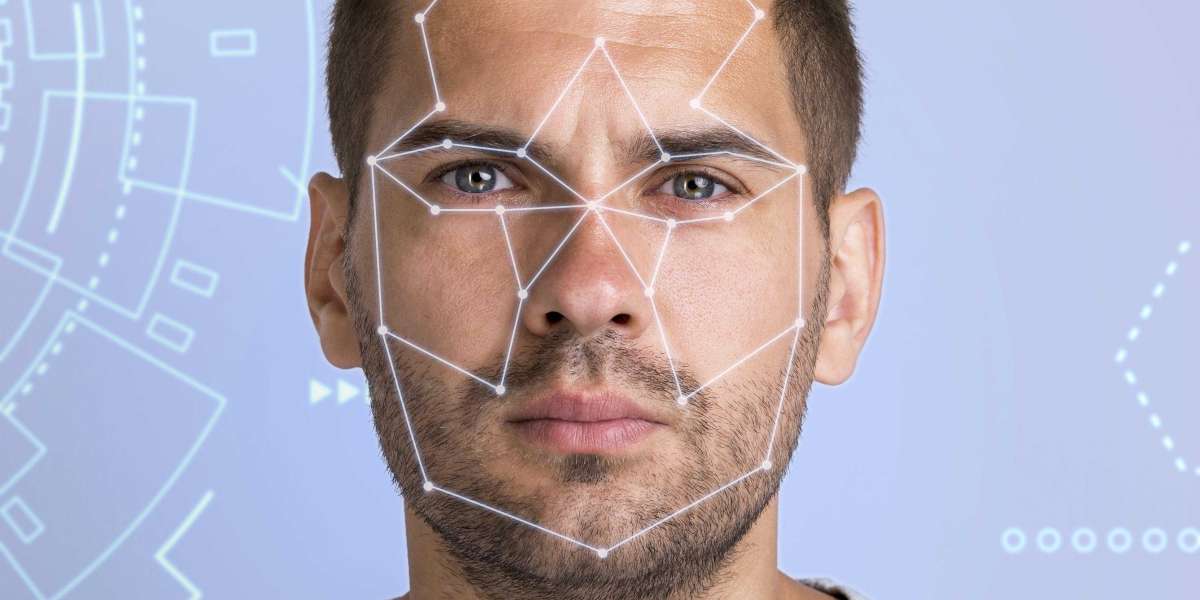

As artificial intelligence continues to evolve, it’s becoming more emotionally intelligent, learning to interpret not just what people do, but how they feel. At the core of this emotional recognition lies the facial action coding system, a framework that decodes subtle facial muscle movements and connects them to emotional states.

Originally used in psychological research, facial expression analysis has been embraced by AI and machine learning for its ability to convert facial expressions into actionable data. In an increasingly digital world, where machines must understand human nuances, this system plays a critical role.

What Is the Facial Action Coding System?

The facial action coding system (FACS) is a method that describes every visible facial movement in terms of action units (AUs), each tied to the activity of specific facial muscles. By observing which action units occur together, machines and researchers can infer emotional expressions such as happiness, anger, fear, or surprise.

Unlike systems that rely on pre-labeled emotional categories, FACS focuses on the building blocks of expression. This makes it especially useful in machine learning, where precision and pattern recognition are key.
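To make the idea of combining action units concrete, here is a minimal Python sketch. The AU combinations are commonly cited prototypes (for example, AU6 cheek raiser plus AU12 lip corner puller for happiness), but exact sets vary between sources, so treat the table as an illustrative assumption. The names `PROTOTYPES` and `match_emotion` are hypothetical and not part of FACS or any library.

```python
# Illustrative prototype table: emotion -> set of action unit numbers.
# These combinations are assumptions for the sketch, not an official standard.
PROTOTYPES = {
    "happiness": frozenset({6, 12}),                # cheek raiser + lip corner puller
    "surprise": frozenset({1, 2, 5, 26}),           # brow raisers + upper lid raiser + jaw drop
    "anger": frozenset({4, 5, 7, 23}),              # brow lowerer + lid raiser/tightener + lip tightener
    "fear": frozenset({1, 2, 4, 5, 7, 20, 26}),
}


def match_emotion(active_aus):
    """Return the emotion whose full prototype is present in active_aus.

    When several prototypes match, the one with the most action units
    (the most specific one) wins. Returns None when nothing matches.
    """
    active = set(active_aus)
    candidates = [
        (len(aus), name)
        for name, aus in PROTOTYPES.items()
        if aus <= active  # every AU of the prototype is active
    ]
    if not candidates:
        return None
    return max(candidates)[1]


print(match_emotion({6, 12}))                    # happiness
print(match_emotion({1, 2, 4, 5, 7, 20, 26}))    # fear (also contains the surprise set)
print(match_emotion({12}))                       # None
```

A production system would more likely learn this mapping from labeled data and use AU intensities rather than a fixed lookup, but the lookup shows why working from building blocks is more flexible than working from fixed emotion labels.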

Why Emotional Recognition Matters in AI

Understanding emotion is essential for building AI that interacts naturally with humans. From virtual assistants to automated healthcare solutions, emotion-aware systems must go beyond logic—they must also recognize and respond to human feelings.

Facial expression analysis allows AI to interpret emotional expressions from live video or static images. By breaking expressions down into micro movements, it gives machines a structured way to perceive human emotion in context.

This capability is enhanced further when paired with AI-based emotion detection, which helps AI classify emotions in real time.

Enhancing Emotion AI with Micro Expression Analysis

Emotions are often expressed through quick, involuntary facial movements known as micro expressions. These last for just a fraction of a second but reveal genuine emotional reactions that people may try to suppress.

Integrating micro expression analysis with facial expression analysis gives AI tools a new level of emotional depth. It enables the detection of subtle cues like tension, discomfort, or joy that aren't always expressed verbally. This is especially valuable in fields like negotiation training, therapy simulations, and user experience testing.

By catching what the eyes might miss, Facial Reflex Interpretation adds precision to emotional recognition systems.
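One simple way to think about spotting such brief movements in video is to look for short runs of frames in which an action unit is active. The sketch below assumes a per-frame on/off signal for a single action unit is already available from some upstream detector. The function name `find_brief_activations` and the 0.5-second cutoff are assumptions chosen for illustration, not an established threshold.

```python
def find_brief_activations(active, fps, max_duration_s=0.5):
    """Find short bursts of activity in a per-frame on/off signal.

    active: sequence of truthy/falsy values, one per video frame.
    fps: frames per second of the video.
    Returns a list of (start_frame, end_frame_exclusive) spans whose
    duration is at most max_duration_s.
    """
    spans = []
    start = None
    # A trailing False closes any run that reaches the last frame.
    for i, flag in enumerate(list(active) + [False]):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if (i - start) / fps <= max_duration_s:
                spans.append((start, i))
            start = None
    return spans


# Two frames of activity (about 0.07 s at 30 fps), then a full second.
signal = [0, 1, 1, 0, 0] + [1] * 30 + [0]
print(find_brief_activations(signal, fps=30))  # [(1, 3)]
```

Only the two-frame burst is reported; the one-second activation is treated as an ordinary, sustained expression.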

The Role of Behavioral Response Measurement

Emotion doesn’t stop at the face; it influences how people behave. That’s why Behavioral Pattern Analysis is often used alongside facial analysis to observe how people react to specific stimuli.

By tracking how expressions change during tasks such as product testing or digital navigation, AI systems can identify frustration, confusion, or satisfaction. The facial action coding system helps break these reactions down into measurable expressions, while Engagement Response Evaluation connects them to real-world actions.

Together, they create a comprehensive view of emotional engagement, helping developers, marketers, and researchers refine their solutions.
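As a rough illustration of linking expressions to tasks, the following Python sketch groups timestamped expression labels by the task that was running at the time and reports the share of each label per task. The data layout and the name `summarize_by_task` are hypothetical; real pipelines would get the labels from an expression classifier and the task windows from session logs.

```python
from collections import Counter


def summarize_by_task(frames, tasks):
    """Compute the share of each expression label within each task window.

    frames: list of (timestamp_s, label) pairs from an expression classifier.
    tasks: list of (name, start_s, end_s) windows; end_s is exclusive.
    Returns {task_name: {label: fraction_of_frames}}.
    """
    summary = {}
    for name, start, end in tasks:
        labels = [label for t, label in frames if start <= t < end]
        total = len(labels)
        counts = Counter(labels)
        summary[name] = (
            {label: n / total for label, n in counts.items()} if total else {}
        )
    return summary


frames = [
    (0.0, "neutral"), (1.0, "neutral"),
    (2.0, "frustration"), (3.0, "frustration"), (4.0, "satisfaction"),
]
tasks = [("search", 0, 2), ("checkout", 2, 5)]
print(summarize_by_task(frames, tasks))
```

In this toy session the checkout step shows frustration in two of its three frames, which is the kind of signal a product team might investigate further.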

Applications Across Key Industries

As machine learning becomes more integrated into everyday systems, the use of facial coding technologies is expanding rapidly.

1. Education

Online learning platforms can analyze student expressions to detect engagement or confusion, helping improve course delivery.

2. Mental Health

Therapeutic tools using facial tracking and emotion detection can monitor signs of distress or mood shifts, especially in non-verbal patients.

3. Customer Experience

Retail and service-based platforms use facial feedback and Behavioral Feedback Analysis to gauge satisfaction or frustration, enabling real-time improvements.

4. Security and Law Enforcement

In high-stress environments, combining Subtle Facial Cue Detection with facial coding can reveal hidden emotions during interviews or screenings.

Challenges in Emotion-Reading AI

While the facial action coding system offers precision, it’s not without limitations. Emotions are influenced by cultural, social, and individual factors. One expression may not mean the same thing in every context.

Moreover, interpreting facial data without user consent raises ethical and privacy concerns. Systems using User Behavior Monitoring and emotion tracking must ensure transparency and data security to maintain user trust.

Developers need to design models that are not only accurate but also fair and inclusive, accounting for a wide range of expressions across different demographics.
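One basic check for this is to compare accuracy across demographic groups on a labeled evaluation set. The sketch below is a minimal example under assumed inputs: the record format and the name `accuracy_by_group` are hypothetical, and a real audit would use larger samples and additional metrics.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute classification accuracy separately for each group.

    records: iterable of (group, true_label, predicted_label) tuples.
    Returns {group: accuracy} for every group that appears.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


records = [
    ("group_a", "happiness", "happiness"),
    ("group_a", "anger", "anger"),
    ("group_b", "happiness", "happiness"),
    ("group_b", "anger", "fear"),
]
print(accuracy_by_group(records))  # {'group_a': 1.0, 'group_b': 0.5}
```

A large gap between groups, as in this toy data, suggests the training set may under-represent some expressions or populations.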

The Road Ahead for Facial Analysis

The future of facial expression analysis in AI lies in real-time, adaptive interactions. As machine learning algorithms become more advanced, systems will be able to learn emotional cues from diverse datasets, adapt responses instantly, and provide more human-like interactions.

Paired with tools like AI-based emotion detection and enriched by Involuntary Expression Tracking, this technology will move from static assessment to dynamic engagement. In real-world use, AI will not just detect emotion; it will respond with empathy, making human-computer interaction more natural and impactful.

Conclusion: Emotionally Intelligent Machines Start with Facial Coding

As AI systems move toward more natural interaction, emotional understanding will define the next generation of intelligent solutions. The facial action coding system serves as a bridge between expression and interpretation, enabling machines to perceive human emotion with clarity and precision.

Supported by micro expression analysis, AI-based emotion detection, and behavioral response measurement, facial coding empowers AI to move beyond transactions into relationships. The future is not just about machines that compute; it’s about machines that connect.

FAQs

1. What is the facial action coding system used for in AI?

The facial action coding system is used to analyze facial muscle movements and understand emotional expressions. In AI, it helps machines recognize and respond to human emotions by decoding these expressions in real time.

2. Can AI detect micro expressions using facial coding?

Yes, AI can detect micro expressions by using facial expression analysis. These brief facial movements reveal genuine emotions, and when combined with advanced analysis tools, they help machines pick up on subtle emotional cues.

3. How does behavioral response measurement work with facial coding?

Behavioral response measurement works by observing how facial expressions change during specific tasks. Using facial expression analysis, AI can link these emotional reactions to user behavior, helping improve interaction and user experience.

4. Is facial expression analysis accurate across all cultures?

While the facial action coding system is widely used, facial expressions can vary across cultures. Developers need to train AI models on diverse datasets to improve accuracy and avoid misinterpretation.

5. Why is emotional detection important in machine learning?

Emotional detection helps AI interact more naturally with people. By understanding how users feel, machines can offer better support, enhance user satisfaction, and create more human-like interactions.